I continue to think one of the hidden gem features of VMware Virtual SAN (VSAN) is its software-defined self-healing ability. I wrote about it a few months back in: Virtual SAN Software Defined Self Healing

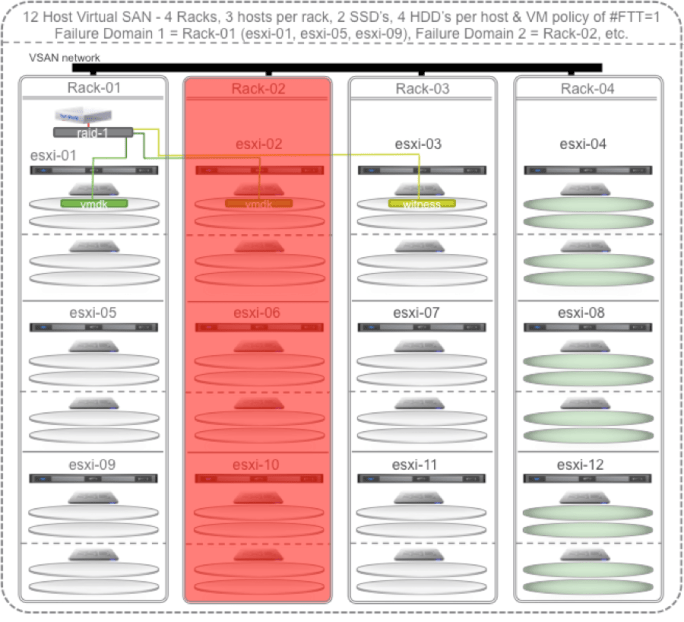

Since Virtual SAN is such a different way to do storage, it allows for some interesting configuration combinations. With vSphere 6, VMware will be introducing a new Virtual SAN feature, built into vSphere 6, called “Rack Awareness”. It is accomplished by creating multiple “Failure Domains” and placing hosts in the same rack into the same Failure Domain. This “Rack Awareness” feature builds on the # Failures To Tolerate policy of Virtual SAN.

The rest of this post will look a lot like the previous post I did on self healing but will translate it for the Rack Awareness feature.

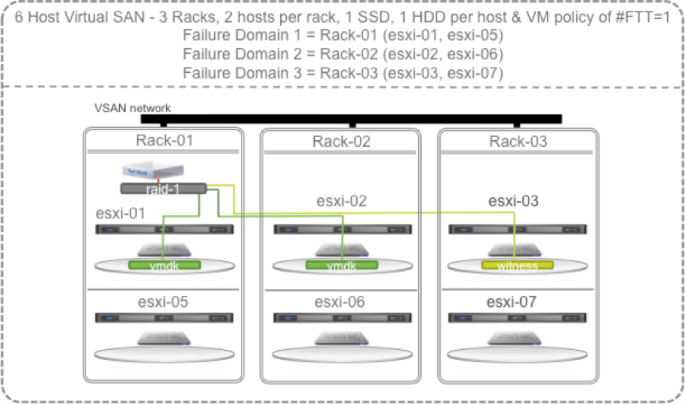

Minimum Rack Awareness Configuration

Let's start with the smallest VSAN “Rack Awareness” configuration possible that provides redundancy: a 3 rack, 6 host (2 per rack) vSphere cluster with VSAN enabled and 1 SSD and 1 HDD per host. In VSAN, each disk group is built around one SSD, so the 1 HDD is placed into a disk group with the 1 SSD. The SSD performs the write and read caching for the HDDs in its disk group. The HDD permanently stores the data.

Let's start with a single VM with the default # Failures To Tolerate (#FTT) equal to 1. A VM has at least 3 objects (namespace, swap, vmdk). Each object has 3 components (data 1, data 2, witness) to satisfy #FTT=1. Let's just focus on the vmdk object and say that the VM sits on host 1, with replicas/mirrors/copies (these terms can be used interchangeably) of its vmdk data on host 1 in rack 1 and host 2 in rack 2, and the witness on host 3 in rack 3. The rule in Virtual SAN is that each of these three components of an object (data 1, data 2, witness) must sit on a different host. With Rack Awareness, those hosts must also be in different racks.
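To make the placement rule concrete, here is a minimal Python sketch. It is only an illustration of the rule as described above, not how VSAN itself places components. The host-to-rack mapping is an assumption (the post only says host 1 is in rack 1, host 2 in rack 2, host 3 in rack 3; hosts 4 to 6 are hypothetical fill-ins), and `placement_is_valid` is a made-up helper name.

```python
from typing import Dict

# Assumed layout: 3 racks (failure domains), 2 hosts per rack.
# Hosts 1-3 follow the example in the text; hosts 4-6 are hypothetical.
RACK_OF: Dict[str, str] = {
    "host1": "rack1", "host4": "rack1",
    "host2": "rack2", "host5": "rack2",
    "host3": "rack3", "host6": "rack3",
}


def placement_is_valid(placement: Dict[str, str]) -> bool:
    """Check the rule for one object with #FTT=1.

    `placement` maps component name -> host name. Every component must
    be on a different host, and with Rack Awareness every component
    must also be in a different rack (failure domain).
    """
    hosts = list(placement.values())
    racks = [RACK_OF[h] for h in hosts]
    distinct_hosts = len(set(hosts)) == len(hosts)
    distinct_racks = len(set(racks)) == len(racks)
    return distinct_hosts and distinct_racks


# The vmdk object from the example: one component per rack.
vmdk = {"data1": "host1", "data2": "host2", "witness": "host3"}
print(placement_is_valid(vmdk))

# Two replicas in the same rack would break the Rack Awareness rule.
same_rack = {"data1": "host1", "data2": "host4", "witness": "host3"}
print(placement_is_valid(same_rack))
```

Note that the second placement uses three different hosts, so it would pass the plain host rule; it only fails once racks are taken into account.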

OK, let's start causing some trouble. With the default # Failures To Tolerate equal to 1, VM data on VSAN should remain available if a single SSD, a single HDD, a single host, or an entire rack fails.
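As a rough way to check that claim, the Python sketch below fails each host and each rack in turn and tests whether the vmdk object would still be reachable. The availability rule used here is a simplifying assumption: the object counts as available when at least one data replica and more than half of its components are still reachable. The rack assignment of hosts 4 to 6 and all function names are hypothetical. Because each host in this configuration has a single disk group, losing its SSD or its HDD is treated the same as losing the host for this object.

```python
# Assumed layout: 3 racks, 2 hosts per rack (hosts 4-6 are hypothetical).
RACK_OF = {
    "host1": "rack1", "host4": "rack1",
    "host2": "rack2", "host5": "rack2",
    "host3": "rack3", "host6": "rack3",
}

# Component placement for the vmdk object from the example.
PLACEMENT = {"data1": "host1", "data2": "host2", "witness": "host3"}


def hosts_in_rack(rack):
    """Return the set of hosts assigned to the given rack."""
    return {h for h, r in RACK_OF.items() if r == rack}


def is_available(failed_hosts, placement=PLACEMENT):
    """Simplified availability check (an assumption, see lead-in).

    Available when at least one data replica survives and more than
    half of the object's components are still reachable.
    """
    alive = [c for c, h in placement.items() if h not in failed_hosts]
    has_replica = any(c.startswith("data") for c in alive)
    has_quorum = 2 * len(alive) > len(placement)
    return has_replica and has_quorum


# Fail every host on its own, then every rack on its own.
for host in sorted(RACK_OF):
    print(host, "fails ->", is_available({host}))
for rack in sorted(set(RACK_OF.values())):
    print(rack, "fails ->", is_available(hosts_in_rack(rack)))

# Two racks at once exceeds #FTT=1.
print("rack1 + rack2 fail ->",
      is_available(hosts_in_rack("rack1") | hosts_in_rack("rack2")))
```

Under these assumptions every single-host and single-rack failure leaves the object available, while losing two racks at once does not.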
