The content of this blog post was created by a couple of colleagues of mine, David Boone and Bradford Garvey. These guys do a phenomenal job making sure VMware Virtual SAN customers get a great experience with the product by helping them plan, configure, and test VSAN. Because of this, they end up uncovering interesting information like what’s to follow.

Sometimes Cisco UCS hardware intended for VMware Virtual SAN has previously been used for other purposes, and a RAID configuration may already exist on the drives. VSAN requires that the individual drives be presented to ESXi either raw, via the I/O controller set in Pass-Through Mode (see: How To Configure the Cisco 12G SAS Modular Raid Controller for Pass-Through Mode), or as disks each set in their own RAID 0 disk group. Best practice is to set the I/O controller in Pass-Through Mode (Enable JBOD). However, if a RAID configuration previously existed, the Cisco UCS platform requires a few extra steps after JBOD mode is enabled on the controller.

If drives were already configured as RAID virtual drives (VDs), delete the RAID configuration from the drives. One way to do that is to clear the entire VD configuration:

Clear the entire VD configuration

- Log into the Cisco UCS Manager

- Open a console to the host

- Reboot the host

- During boot, press Ctrl+R to enter the Cisco 12G SAS Modular Raid Controller BIOS Configuration Utility

- Press Ctrl+N until the “VD Mgmt” page is selected

- In the “VD Mgmt” screen, navigate to the controller, and press the F2 key.

- Navigate to “Clear Configuration” and press Enter. You should see this popup:

- Press “Yes” to delete all the virtual drives

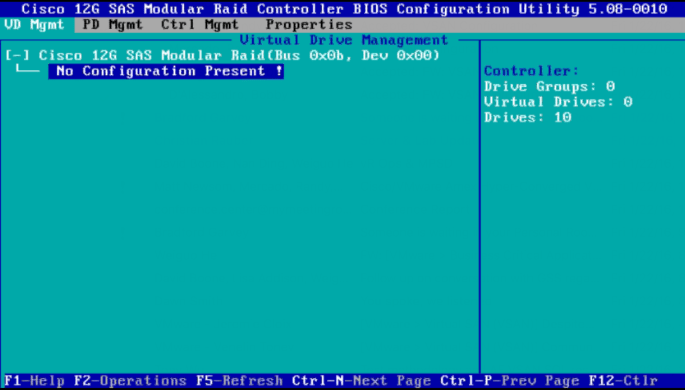

Drives will then be in an “Unconfigured Good” state. They might look something like this:

If you see this, these 10 drives are in an “Unconfigured Good” state. They need to be converted to a JBOD state.

There are two options. You can convert a bunch of Unconfigured Good drives to JBOD drives in one pass (from the “VD Mgmt” screen), or you can convert a particular Unconfigured Good drive to a JBOD drive (from the “Drive Management” screen).

Option 1: Convert a bunch of Unconfigured Good drives to JBOD drives

Perform the following steps to convert a bunch of Unconfigured Good drives to JBOD drives:

- In the “VD Mgmt” screen, navigate to the controller and press the F2 key.

- Navigate to “Make JBOD”, and press Enter.

The “Convert Unconfigured Good to JBOD” dialog appears, which shows all Unconfigured Good drives in the system.

- Select the Unconfigured Good drives which you want configured as JBODs for VSAN.

To select or deselect all the Unconfigured Good drives at once, select the topmost square brackets in the “Unconfig good drives” box.

- Press “OK”.

The selected Unconfigured Good drives are converted to JBOD drives.

Option 2: Convert a particular Unconfigured Good drive to a JBOD drive

Perform the following steps to convert a particular Unconfigured Good drive to a JBOD drive:

- In the “Drive Management” screen, navigate to an Unconfigured Good drive, and press the F2 key.

- Navigate to “Make JBOD”, and press Enter.

- Press “OK” in the message confirmation box to continue.
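To make the drive states easier to follow, here is a minimal Python sketch of the transitions described above: RAID member, then Unconfigured Good after “Clear Configuration”, then JBOD after “Make JBOD”. It is only a model for reasoning about the procedure. It does not talk to the controller, and every name in it (`DriveState`, `clear_configuration`, `make_jbod`, `all_passed_through`) is hypothetical and made up for illustration.

```python
from enum import Enum


class DriveState(Enum):
    # Hypothetical labels for the three states discussed in this post.
    RAID_MEMBER = "member of a RAID virtual drive"
    UNCONFIGURED_GOOD = "Unconfigured Good"
    JBOD = "JBOD"


def clear_configuration(drives):
    """Model "Clear Configuration": all virtual drives are deleted, so every
    former RAID member becomes Unconfigured Good."""
    return {
        slot: DriveState.UNCONFIGURED_GOOD
        if state is DriveState.RAID_MEMBER
        else state
        for slot, state in drives.items()
    }


def make_jbod(drives, selected=None):
    """Model "Make JBOD".

    selected=None stands for ticking the topmost box (Option 1, all drives);
    a set of slot numbers stands for converting particular drives (Option 2).
    Only Unconfigured Good drives are converted.
    """
    result = {}
    for slot, state in drives.items():
        chosen = selected is None or slot in selected
        if chosen and state is DriveState.UNCONFIGURED_GOOD:
            result[slot] = DriveState.JBOD
        else:
            result[slot] = state
    return result


def all_passed_through(drives):
    """True only when every drive in the model is in the JBOD state."""
    return all(state is DriveState.JBOD for state in drives.values())


# Ten drives that start out as members of an old RAID configuration.
drives = {slot: DriveState.RAID_MEMBER for slot in range(1, 11)}
cleared = clear_configuration(drives)

one_converted = make_jbod(cleared, selected={1})  # Option 2, slot 1 only
all_converted = make_jbod(cleared)                # Option 1, every drive
```

In this model, `all_passed_through(one_converted)` is false because nine drives are still Unconfigured Good, while `all_passed_through(all_converted)` is true. That matches the point of the walkthrough: every drive intended for VSAN has to reach the JBOD state, whichever option is used to get it there.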

After converting all the 10 drives above to JBOD, the screen looks like this:

Result

After rebooting, the BIOS will report all 10 drives and ESXi will see all of them in a JBOD (Pass-Through) configuration, with all the benefits of JBOD, such as being able to retrieve S.M.A.R.T. information from the physical drives.

The information in this post was gathered from the Avago – 12Gb/s MegaRAID® SAS Software – User Guide.

Thanks again to David Boone and Bradford Garvey for providing this information.