On Friday, March 18, I took the opportunity to watch the live webcast of Storage Field Day 9. If you can carve out some time, I highly recommend it.

Tech Field Day (@TechFieldDay)

VMware Storage Presents at Storage Field Day 9

The panel of industry experts asks all the tough questions, and the great VMware Storage team answers them all.

| Storage Industry Experts | VMware Virtual SAN Experts |
| --- | --- |
| Alex Galbraith @AlexGalbraith | Yanbing Li @ybhighheels |
| Chris M Evans @ChrisMEvans | Christos Karamanolis @XtosK |
| Dave Henry @DaveMHenry | Rawlinson Rivera @PunchingClouds |
| Enrico Signoretti @ESignoretti | Vahid Fereydouny @vahidfk |
| Howard Marks @DeepStorageNet | Gaetan Castelein @gcastelein1 |
| Justin Warren @JPWarren | Anita Kibunguchy @kibuanita |
| Mark May @CincyStorage | |
| Matthew Leib @MBLeib | |
| Richard Arnold @3ParDude | |
| Scott D. Lowe @OtherScottLowe | |
| Vipin V.K. @VipinVK111 | |
| W. Curtis Preston @WCPreston | |

The ~2-hour presentation was broken up into easily consumable chunks. Here’s a breakdown of the recorded session:

In this introduction, Yanbing Li, Senior Vice President and General Manager, Storage and Availability, discusses VMware’s company success, the state of the storage market, and the success of Virtual SAN, the HCI market leader, with over 3,000 customers.

Christos Karamanolis, CTO, Storage and Availability BU, jumps into how Virtual SAN works, answers questions on the use of high-endurance and commodity SSDs, and explains how Virtual SAN service levels can be managed through VMware’s common control plane, Storage Policy-Based Management.

Christos continues the discussion around VSAN features as they’ve progressed from the 1st-generation Virtual SAN, released on March 12, 2014, to the 2nd, 3rd, and now 4th generation, which was just released on March 16, 2016. The discussion in this section focuses heavily on data protection features like stretched clustering and vSphere Replication. They dove deep into how vSphere Replication can deliver application-consistent protection as well as a true 5-minute RPO: the built-in intelligent scheduler sends the data deltas within the 5-minute window, monitors the SLAs, and alerts if they cannot be met due to network issues.

Deduplication, compression, and distributed RAID 5 and RAID 6 erasure coding are all now available in all-flash Virtual SAN configurations. Christos provides the skinny on all these data reduction and space efficiency features and how enabling them adds very little overhead on the vSphere hosts. Rawlinson chimes in on the automated way Virtual SAN can build the cluster of disks and disk groups that deliver the capacity for the shared VSAN datastore. These can certainly be built manually, but VMware’s design goal is to make the storage system as automated as possible. The conversation then moves to checksums and how Virtual SAN protects the integrity of data on disk.
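To put the erasure coding savings in perspective, here is a quick back-of-the-envelope sketch of my own (it is not from the session). It assumes the layouts commonly described for all-flash Virtual SAN 6.2: RAID 5 as 3 data + 1 parity and RAID 6 as 4 data + 2 parity, compared with plain mirroring. The `LAYOUTS` table and the `raw_capacity_gb` helper are hypothetical names for illustration, and the math ignores deduplication, compression, checksum metadata, and any other overhead.

```python
from fractions import Fraction

# Assumed layouts, for illustration only: (data fragments, redundancy fragments).
# Mirroring is modeled as 1 data copy plus N additional full copies.
LAYOUTS = {
    "RAID-1 mirroring (FTT=1)": (1, 1),
    "RAID-5 erasure coding (FTT=1)": (3, 1),
    "RAID-1 mirroring (FTT=2)": (1, 2),
    "RAID-6 erasure coding (FTT=2)": (4, 2),
}


def raw_capacity_gb(usable_gb, layout):
    """Return the raw capacity consumed to store `usable_gb` of VM data."""
    data, redundancy = LAYOUTS[layout]
    # Every `data` fragments written also require `redundancy` extra fragments.
    multiplier = Fraction(data + redundancy, data)
    return float(usable_gb * multiplier)


if __name__ == "__main__":
    for name in LAYOUTS:
        raw = raw_capacity_gb(100, name)
        print(f"{name}: 100 GB of VM data consumes about {raw:.1f} GB raw")
```

Under these assumptions, tolerating one failure costs about 1.33x the data size with RAID 5 instead of 2x with mirroring, and tolerating two failures costs 1.5x with RAID 6 instead of 3x.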

OK, this part was incredible! Christos threw down the gauntlet, so to speak. He presented the data behind the testing that shows minimal impact on the hosts when enabling the space efficiency features. He also presented performance data for OLTP workloads, VDI, Oracle RAC, etc. All cards on the table here. I can’t begin to summarize; you’ll just need to watch.

Rawlinson Rivera takes over and does what he does best, throwing all caution to the wind and delivering live demonstrations. He showed the Virtual SAN Health Check and the new Virtual SAN Performance Monitoring and Capacity Management views built into the vSphere Web Client. Towards the end, Howard Marks asked about supporting future Intel NVMe capabilities, and Christos’s response was that it’s safe to say VMware is working closely with Intel to ensure the VMware storage stack can utilize the next-generation devices. Virtual SAN already supports the Intel P3700 and P3600 NVMe devices.

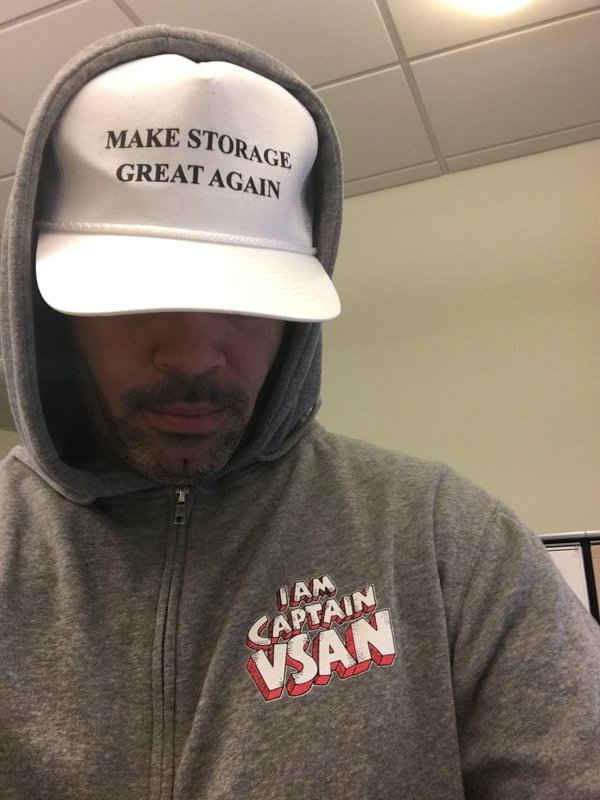

This was such a great session that I thought I’d promote it and make it easy to check out. By the way, here’s Rawlinson wearing a special hat!